Telemetry monitoring in modern space systems, such as Martian rovers and Earth observation satellites, requires the real-time analysis of extremely high-dimensional data streams. These time-series signals are inherently affected by thermal noise and unpredictable radiative variations, making fault detection a critical computational bottleneck. As highlighted in our previous deep dives on on-board decision-making autonomy, interplanetary communication latency forces spacecraft to process and interpret anomalies locally, without waiting for a human feedback loop.

Historically, space anomaly detection has relied almost exclusively on the Reconstruction Error paradigm. Deep learning architectures like TadGAN or Variational Autoencoders are trained to reconstruct “nominal” telemetry patterns, flagging statistically significant deviations as anomalies. Unfortunately, this approach leads to two structural problems: severe overfitting on standard operational data and the inability to recognize contextual faults that were never seen during training. To overcome this, ground and flight system engineering is migrating toward Zero-Shot Anomaly Detection, an approach that aims to isolate unprecedented systemic alterations without requiring data labeling or prior training on those specific error signatures.

In this technical article, we will thoroughly analyze the shift toward the Relative Context Discrepancy (RCD) paradigm, focusing on the recent TimeRCD algorithmic framework. As introduced in the foundational research paper from Tsinghua University (Lan et al., “Towards Foundation Models for Zero-Shot Time Series Anomaly Detection”, arXiv 2025), abandoning the indirect goal of data reconstruction in favor of the direct evaluation of discrepancies between adjacent time windows represents a breakthrough. We will explore the vector logic of TimeRCD, its code, and how its application on complex NASA telemetry datasets (specifically SMAP) is redefining the limits of space autonomy.

The Limits of Reconstruction: Why TadGAN Is No Longer Enough

For years, the de facto standard for automated space telemetry analysis has been the paradigm based on Reconstruction Error. Algorithms like Variational Autoencoders (VAEs) or adversarial architectures like TadGAN (Time series Anomaly Detection Generative Adversarial Networks) operate on a mathematical principle that is as logical as it is indirect.

In these networks, the time series from nominal sensors are compressed into a low-dimensional latent space and subsequently “reconstructed.” The underlying thesis is that a network trained exclusively on routine operations will fail to decode anomalous patterns. Consequently, a large reconstruction error (e.g., the Mean Squared Error) between the original input X and the reconstructed output \hat{X} triggers the alarm.
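As a minimal sketch of this decision rule, the snippet below scores a window by the Mean Squared Error between X and \hat{X} and compares it with a threshold. The sample values and the threshold of 0.1 are hypothetical, and the reconstructions are written by hand rather than produced by a real autoencoder or TadGAN model.

```python
def reconstruction_error(x, x_hat):
    """Mean squared error between a window and its reconstruction."""
    if len(x) != len(x_hat):
        raise ValueError("x and x_hat must have the same length")
    return sum((a - b) ** 2 for a, b in zip(x, x_hat)) / len(x)


def is_anomalous(x, x_hat, threshold):
    """Flag the window when the reconstruction error exceeds the threshold."""
    return reconstruction_error(x, x_hat) > threshold


# Hypothetical telemetry window and two hand-written reconstructions.
window = [1.0, 1.1, 0.9, 1.0]
close_reconstruction = [1.0, 1.0, 1.0, 1.0]  # network "recognizes" the pattern
poor_reconstruction = [1.0, 1.0, 3.0, 1.0]   # network fails to decode sample 3

quiet = is_anomalous(window, close_reconstruction, threshold=0.1)
alarm = is_anomalous(window, poor_reconstruction, threshold=0.1)
print(quiet, alarm)
```

The whole detector therefore hinges on two things the score itself cannot guarantee: that the network reconstructs every nominal pattern well, and that it reconstructs every fault badly.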

TadGAN, specifically, improved this process by introducing adversarial training, combining generator and critic networks to map the temporal dependencies of hardware logs. However, real mission logs have exposed inherent vulnerabilities in this approach.

The Bottleneck: Overfitting and Zero-Shot Failure

The fatal flaw of generative models in deep space lies in the “reconstruction paradox.” When a rover operates in extreme environments, telemetry streams undergo constant fluctuations due to sharp thermal gradients or Single Event Upsets (SEUs) induced by cosmic radiation. In this context, the classic approach collapses:

- Overfitting on nominal data: Neural networks tend to rigidly memorize the patterns of the training set. A harmless physical variation that has never been observed before (such as a natural drift of a navigation sensor) generates a high reconstruction error, flooding the onboard system with false positives.

- The “Reconstruction Trap”: If the network’s capacity is increased to tolerate noise and reduce false positives, the model becomes hyper-expressive and reconstructs even fault patterns almost perfectly. This drives the residual X - \hat{X} toward zero, leading to unreported critical failures (false negatives).
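Both failure modes can be illustrated with two deliberately extreme stand-ins for a trained network. This is a toy sketch, not a model of TadGAN: the "rigid" reconstructor always outputs a memorized nominal level, the "hyper-expressive" one simply copies its input, and all values and the threshold are hypothetical.

```python
def mse(x, x_hat):
    """Mean squared error between a window and its reconstruction."""
    return sum((a - b) ** 2 for a, b in zip(x, x_hat)) / len(x)


def rigid_reconstructor(window, nominal_level=1.0):
    """Overfitted stand-in: always outputs the memorized nominal level."""
    return [nominal_level] * len(window)


def hyper_expressive_reconstructor(window):
    """Over-capacity stand-in: copies its input, faults included."""
    return list(window)


THRESHOLD = 0.1  # hypothetical alarm threshold

harmless_drift = [1.5, 1.5, 1.6, 1.6]  # benign offset never seen in training
real_fault = [1.0, 1.0, 9.0, 1.0]      # spike that should be reported

drift_error = mse(harmless_drift, rigid_reconstructor(harmless_drift))
fault_error = mse(real_fault, hyper_expressive_reconstructor(real_fault))

false_positive = drift_error > THRESHOLD       # alarm on harmless drift
false_negative = not fault_error > THRESHOLD   # silence on a real fault
print(false_positive, false_negative)
```

Real networks sit somewhere between these two extremes, which is exactly why tuning capacity trades one error type for the other.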

In uncharted operational environments, it is impossible to pre-train a model on every single failure mode. This is why reconstruction methods are unsuited for true Zero-Shot Anomaly Detection. They react poorly to uncertainty, trying to “imitate” the data rather than understanding its logical breaks.

To ensure the decision-making autonomy of an interplanetary vehicle, we need an algorithm capable of extracting anomalies without any prior training on them. This fundamental bottleneck has forced the transition from generative models to metrics purely based on contextual discrepancy.

The TimeRCD Paradigm: Relative Context Discrepancy

To overcome the structural limitations of overfitting and enable true Zero-Shot Anomaly Detection, space research is embracing a radical paradigm shift: abandoning signal reconstruction in favor of measuring discrepancies. This is where the TimeRCD framework comes into play.

Instead of forcing a neural network to predict or recreate the exact value of a temperature or attitude sensor, the Relative Context Discrepancy (RCD) approach evaluates the intrinsic coherence of the data stream. The core principle is that an anomaly, by definition, alters the semantic relationship between recent events and the long-term history of the system.

This approach aligns perfectly with the emergence of Foundation Models for Time Series. By using an encoder pre-trained on vast, heterogeneous datasets, TimeRCD abstracts raw telemetry by extracting high-level features without requiring any fine-tuning on the specific mission logs.

Algorithm Architecture

The architecture of TimeRCD relies on an elegant bifurcation of temporal analysis. The incoming telemetry stream is dynamically split into two distinct representations:

- Local Context: A narrow time window that captures the current and immediate state of the subsystem (e.g., the last 5 seconds of the rover’s wheel power draw).

- Global Context: A much broader time window that acts as an operational baseline, representing recent macroscopic behavior.

Both windows are processed by a shared encoder, which maps the raw data into a high-dimensional latent space. The algorithm does not attempt any decoding. Instead, it calculates the vector distance between the two generated embeddings.

Mathematically, the anomaly metric A_t at time t is defined by the discrepancy D between the latent representations E:

A_t = D(E(X_{local}), E(X_{global}))

If the rover encounters unexpected terrain causing wheel slip (a kinematic anomaly), the local context will experience a sharp vector deviation from the global trend. This distance, usually quantified via cosine distance (one minus the cosine similarity) or the L_2 norm, provides a robust anomaly score that is far less sensitive to the white noise that typically fools generative models.
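The two choices for D behave differently, which a small sketch makes concrete. The embeddings below are hypothetical two-dimensional vectors chosen by hand, not outputs of a real encoder: cosine distance reacts only to a change in direction, while the L_2 distance also reacts to a change in magnitude.

```python
import math


def cosine_distance(u, v):
    """1 - cosine similarity; ranges from 0 (same direction) to 2 (opposite)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        raise ValueError("cosine distance is undefined for a zero vector")
    return 1.0 - dot / (norm_u * norm_v)


def l2_distance(u, v):
    """Euclidean distance between two embeddings."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


# Hypothetical embeddings E(X_global) and two candidate E(X_local).
global_embedding = [1.0, 0.0]
scaled_local = [2.0, 0.0]   # same direction, larger magnitude
rotated_local = [0.0, 1.0]  # orthogonal direction

print(cosine_distance(global_embedding, scaled_local))   # direction unchanged
print(l2_distance(global_embedding, scaled_local))       # magnitude changed
print(cosine_distance(global_embedding, rotated_local))  # direction changed
```

Which behavior is preferable depends on whether the encoder stores meaningful information in the embedding norm; that is an assumption to check for any given model.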

Vector Implementation (PyTorch Pseudocode)

For data scientists and mission control engineers, translating this concept into code requires the use of tensor frameworks. Below is a simplified example of extracting the RCD metric in PyTorch:

```python
import torch
import torch.nn.functional as F


def compute_time_rcd_score(telemetry_stream, encoder, local_size, global_size):
    """
    Calculates the Zero-Shot Anomaly Detection score based on RCD.

    Args:
        telemetry_stream (Tensor): Sensor data stream [Batch, Time, Features]
        encoder (nn.Module): Pre-trained Foundation model for Time Series;
            it must accept windows of different lengths
        local_size (int): Size of the local context window
        global_size (int): Size of the global context window

    Returns:
        Tensor: Anomaly score per batch element, shape [Batch]
    """
    # 1. Extraction of overlapping time windows
    # The global context includes the history, the local focuses on recent data
    global_window = telemetry_stream[:, -global_size:, :]
    local_window = telemetry_stream[:, -local_size:, :]

    # 2. Mapping into the latent space (Zero-Shot Feature Extraction)
    with torch.no_grad():  # No training/backpropagation required
        global_embedding = encoder(global_window)  # Shape: [Batch, Latent_Dim]
        local_embedding = encoder(local_window)    # Shape: [Batch, Latent_Dim]

    # 3. Calculation of Relative Context Discrepancy (1 - Cosine Similarity)
    # The score lies in [0, 2]: close to 0 indicates normality,
    # larger values indicate a stronger anomaly
    cosine_sim = F.cosine_similarity(local_embedding, global_embedding, dim=-1)
    rcd_anomaly_score = 1.0 - cosine_sim

    return rcd_anomaly_score


# Example of onboard usage: if rcd_anomaly_score > threshold, trigger safe mode.
```

This snippet highlights the computationally lightweight nature of Zero-Shot inference: by disabling gradient tracking (torch.no_grad()) and skipping the sensor-by-sensor reconstruction phase, TimeRCD reduces the work required per inference to two encoder passes and one vector comparison. This is a crucial advantage for Rad-Hard processors (like the RAD750) operating with extremely limited computing power.
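To show how such a score might be evaluated continuously on a stream, here is a dependency-free sketch that slides the local and global windows forward one sample at a time. Everything in it is an assumption made for illustration: `toy_encoder` is a hypothetical stand-in built from summary statistics (a real deployment would use the pre-trained encoder), and the stream values and `THRESHOLD` are invented.

```python
import math
from statistics import fmean, pstdev


def toy_encoder(window):
    """Hypothetical stand-in encoder: mean, spread, and net change of a window."""
    return [fmean(window), pstdev(window), window[-1] - window[0]]


def cosine_distance(u, v):
    """1 - cosine similarity between two embeddings."""
    dot = sum(a * b for a, b in zip(u, v))
    norms = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return 1.0 - dot / norms


def stream_scores(stream, encoder, local_size, global_size):
    """Score every position at which a full global window is available."""
    scores = []
    for end in range(global_size, len(stream) + 1):
        global_window = stream[end - global_size:end]
        local_window = stream[end - local_size:end]
        scores.append(cosine_distance(encoder(local_window), encoder(global_window)))
    return scores


# Hypothetical stream: 40 nominal samples with small ripple, then a ramp fault.
nominal = [1.0 + 0.02 * (i % 2) for i in range(40)]
ramp_fault = [1.5, 2.0, 2.5, 3.0, 3.5]
scores = stream_scores(nominal + ramp_fault, toy_encoder, local_size=5, global_size=20)

THRESHOLD = 0.01  # hypothetical; in practice calibrated on nominal scores
alarms = [score > THRESHOLD for score in scores]
print(alarms.index(True) if True in alarms else "no alarm")
```

Because the local window is contained in the global one, the fault also shifts the baseline, so the score rises gradually as the ramp fills the local window instead of jumping at the first faulty sample.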

Case Study: Validation on NASA SMAP and MSL Datasets

The theoretical transition to Zero-Shot Anomaly Detection requires rigorous empirical validation. In the aerospace landscape, the public datasets released by the Jet Propulsion Laboratory (JPL) represent the gold standard for algorithm benchmarking.

Specifically, we are looking at the telemetry logs of the SMAP (Soil Moisture Active Passive) satellite and the MSL (Mars Science Laboratory) mission, the latter covering the operational data streams of the Curiosity rover. These datasets are fundamental because they contain real anomalies documented in Incident Surprise, Anomaly (ISA) reports, buried in years of highly noisy nominal data.

Performance Metrics (F1-Score and Precision)

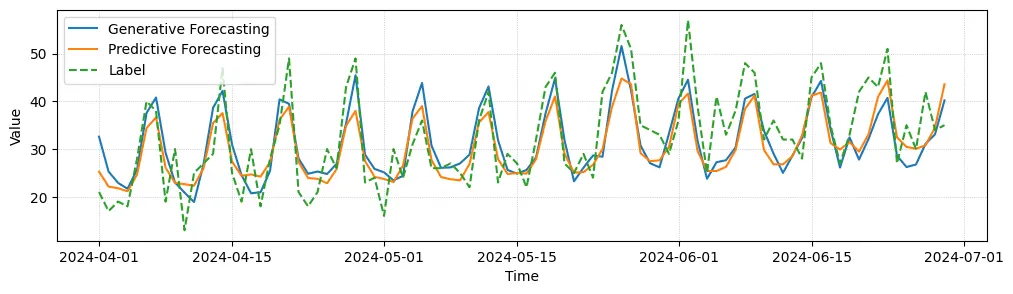

A direct comparison between classic architectures and the TimeRCD framework reveals a stark performance leap. Evaluated on the complex vector channels of SMAP and MSL, the approach based on Relative Context Discrepancy subverts the hierarchies established by generative models.

Looking at the key metrics, the limits of TadGAN emerge clearly. Reconstruction models struggle to surpass an F1-Score of 0.73 on the SMAP dataset, heavily weighed down by a high percentage of false alarms caused by harmless thermal or voltage fluctuations.

Recall that the F1-Score balances the model’s effectiveness using the formula:

F_1 = 2 \cdot \frac{\text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}

In contrast, when the TimeRCD algorithm is applied in a pure zero-shot scenario (without any fine-tuning on the probe’s specific data), the F1-Score jumps to over 0.86. This increase is driven by a drastic rise in Precision, demonstrating TimeRCD’s superior ability to isolate actual hardware failures without being misled by routine environmental variations.
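To make the link between false alarms and the F1-Score tangible, the sketch below computes Precision, Recall, and F1 from raw detection counts. The counts are hypothetical and are not taken from the SMAP or MSL benchmarks; the two cases differ only in the number of false alarms.

```python
def precision_recall_f1(true_positives, false_positives, false_negatives):
    """Return (precision, recall, f1) from detection counts."""
    predicted = true_positives + false_positives
    actual = true_positives + false_negatives
    precision = true_positives / predicted if predicted else 0.0
    recall = true_positives / actual if actual else 0.0
    if precision + recall == 0.0:
        return precision, recall, 0.0
    f1 = 2.0 * precision * recall / (precision + recall)
    return precision, recall, f1


# Hypothetical detector A: 8 faults caught, 2 false alarms, 2 faults missed.
few_alarms = precision_recall_f1(true_positives=8, false_positives=2, false_negatives=2)

# Hypothetical detector B: same hits and misses, but 8 false alarms.
many_alarms = precision_recall_f1(true_positives=8, false_positives=8, false_negatives=2)

print(few_alarms)
print(many_alarms)
```

With Recall held constant, the extra false alarms lower Precision and pull the F1-Score down with it, which is the mechanism behind the gap described above.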

Practical Results: The Impact on Automated Mission Control

Translating these mathematical metrics into operational benefits means redefining the very concept of automated mission control. The adoption of the RCD paradigm generates immediate systemic impacts for future space architectures:

- Slashing False Positives: TimeRCD’s high precision prevents the unjustified activation of Safe Mode. This drastically reduces the cognitive fatigue of Ground Control, avoiding the waste of precious Deep Space Network (DSN) bandwidth to diagnose false alarms.

- Reduced Computational Load: By not having to generate and reconstruct extremely high-dimensional data arrays, TimeRCD lightens the system buses. This efficiency allows inference to be executed directly on legacy Rad-Hard CPUs (like the RAD750) or space-qualified COTS components.

- Extreme Interplanetary Adaptability: Being a native zero-shot model, the exact same algorithm used for the orbital cycles of an LEO satellite like SMAP can be transferred to the navigation computer of a Martian rover, wiping out the costs of re-training and data labeling.

The Future of Autonomous Mission Control

The integration of Zero-Shot Anomaly Detection through algorithmic frameworks like TimeRCD is not just a software update. It represents, in fact, an ontological break in how space systems engineering conceives onboard intelligence. Abandoning the illusion of being able to mathematically “reconstruct” every possible nominal scenario means accepting, and proactively managing, the intrinsically chaotic nature of deep space exploration.

As a researcher in space robotics, I find this transition from pure determinism to vector discrepancy analysis profoundly fascinating. For decades, in terrestrial laboratories, we have tried to hard-code and predict every single failure mode using rigid decision trees. Today, we are teaching our machines to perceive the “cognitive dissonance” in their own health status, relying on the logical abstraction of Relative Context Discrepancy rather than human intuition.

This is the true prerequisite for autonomy. When future missions like Dragonfly navigate the dense skies of Titan, or when cryobots descend beneath the icy crust of Europa, round-trip communication latency will be measured in hours, no longer in minutes. In that absolute operational darkness, the vehicle’s survival will not depend on a flashing alert on JPL monitors, but on the cold, silent, and autonomous evaluation of a metric A_t.

The algorithmic umbilical cord to Earth is finally breaking. And that is exactly what interplanetary robotics needs to take its next giant leap.
